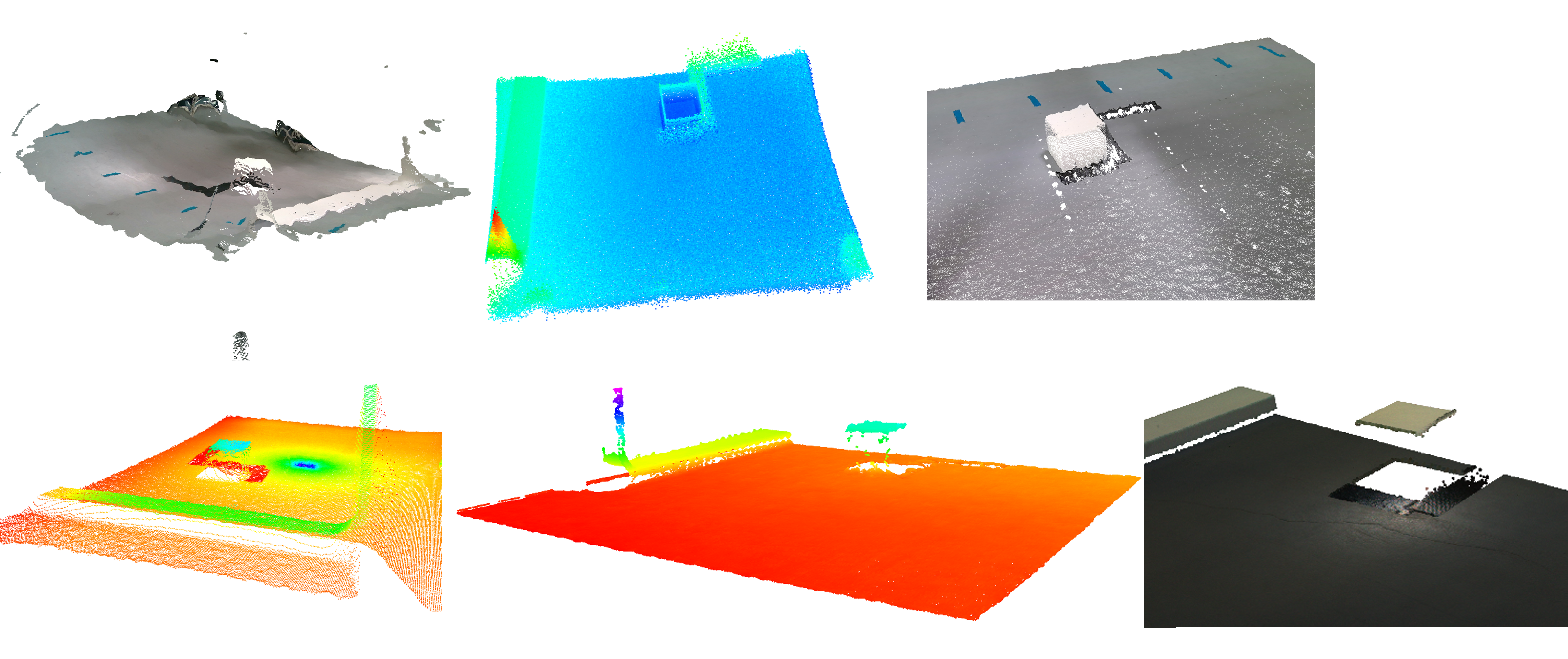

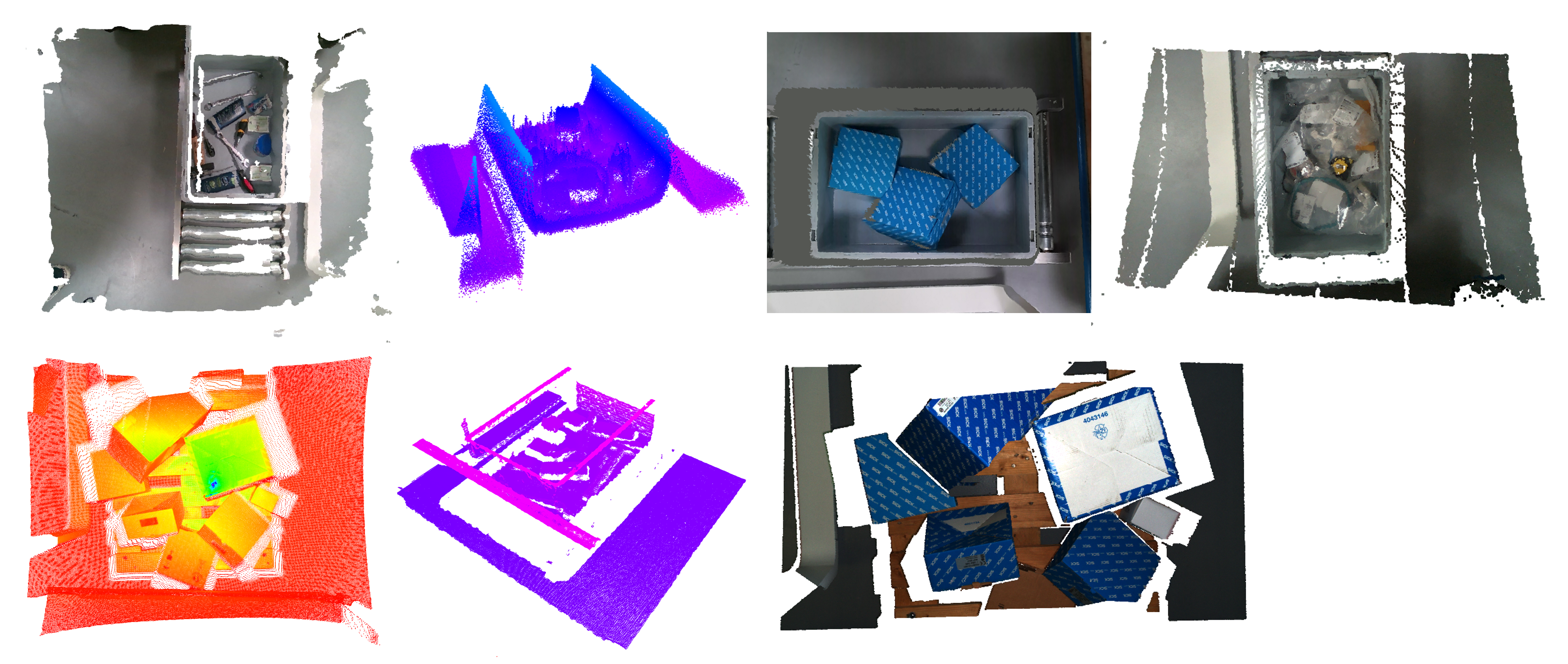

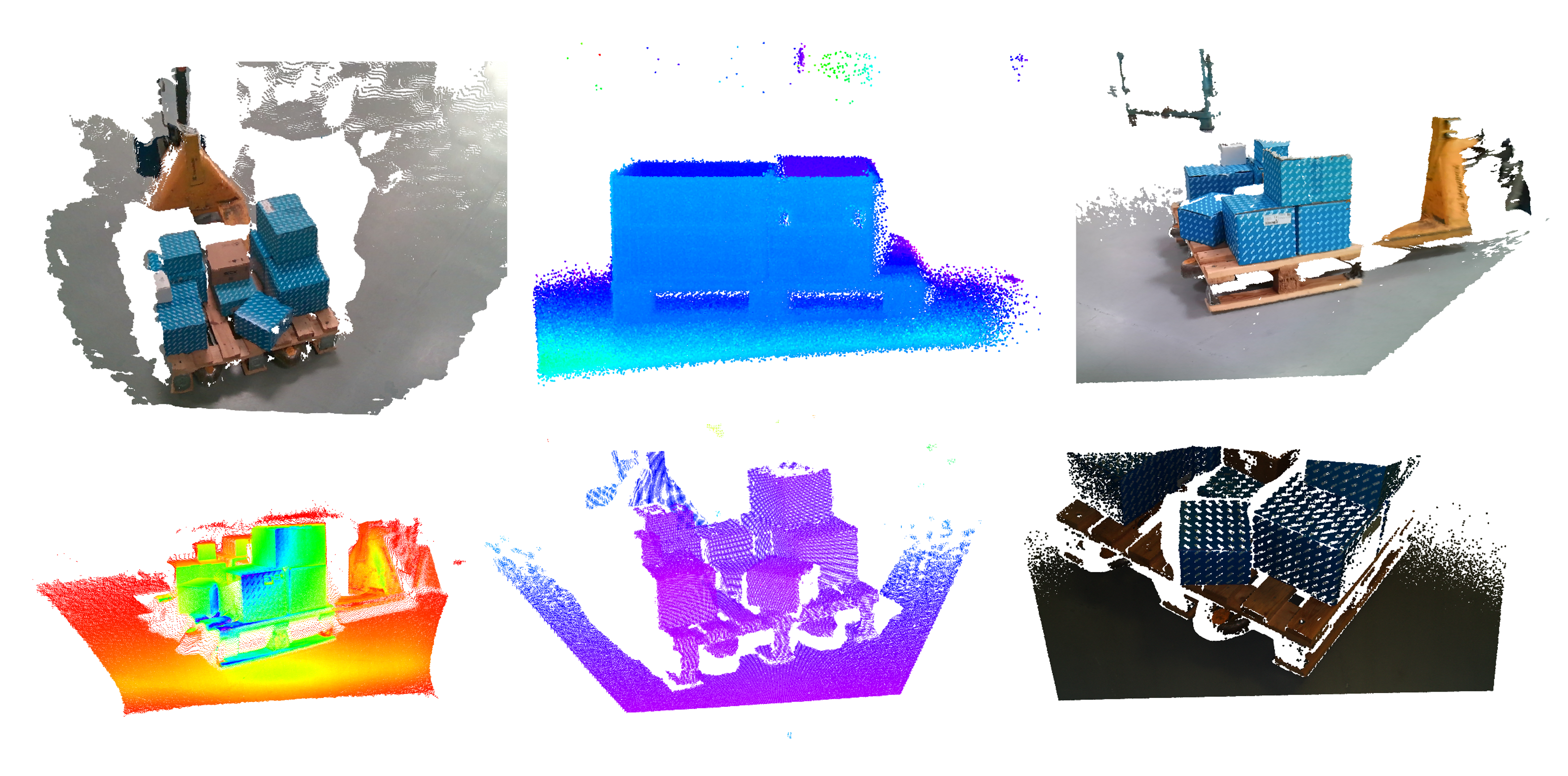

3D sensors capture depth data of objects and environments, which makes them well suited to object recognition, detection, and localization in three-dimensional space. Because 3D sensors differ in price, underlying technology, maximum range, and the quality of the data they generate, a calibrated and synchronized data set was recorded at Fraunhofer IML for logistics scenarios and for evaluation purposes. The sensors used are listed in the table below.

| Sensors from the paper |
| --- |
| Intel RealSense D455 |
| P+F SmartRunner Explorer 3-D |
| Azure Kinect DK |
| Intel RealSense L515 |
| Sick T-mini |
| Sick Visionary-S |
| Zivid Two |

Fraunhofer Institute for Material Flow and Logistics IML
